Liz Reid, the Head of Google Search, has admitted that the company’s search engine has returned some “odd, inaccurate or unhelpful AI Overviews” after they rolled out to everyone in the US. The executive published an explanation for Google’s more peculiar AI-generated responses in a blog post, where she also announced that the company has implemented safeguards that will help the new feature return more accurate and less meme-worthy results.

Reid defended Google and pointed out that some of the more egregious AI Overview responses going around, such as claims that it’s safe to leave dogs in cars, are fake. The viral screenshot showing the answer to “How many rocks should I eat?” is real, but she said that Google came up with an answer because a website had published satirical content tackling the topic. “Prior to these screenshots going viral, practically no one asked Google that question,” she explained, so the company’s AI linked to that website.

The Google VP also confirmed that AI Overview told people to use glue to get cheese to stick to pizza based on content taken from a forum. She said forums typically provide “authentic, first-hand information,” but they could also lead to “less-than-helpful advice.” The executive didn’t mention the other viral AI Overview answers going around, but as The Washington Post reports, the technology also told users that Barack Obama was Muslim and that people should drink plenty of urine to help them pass a kidney stone.

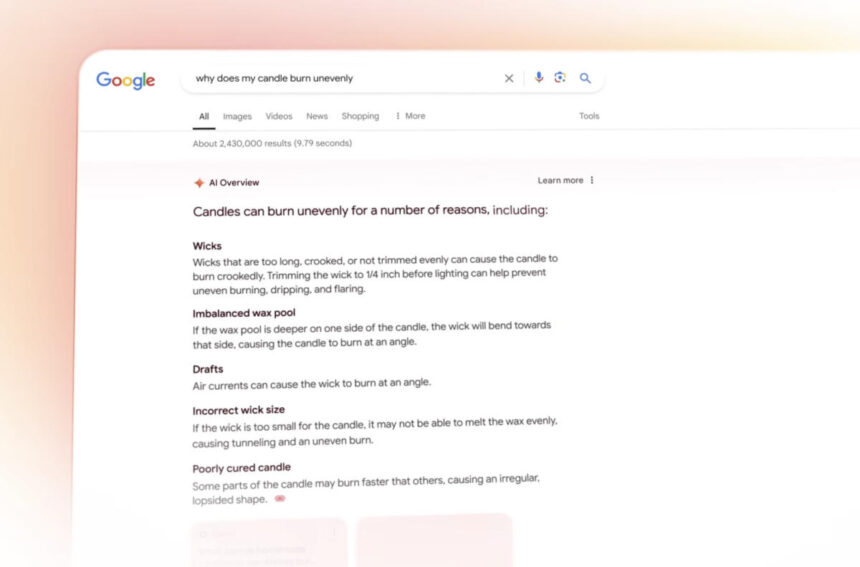

Reid said the company tested the feature extensively before launch, but “there’s nothing quite like having millions of people using the feature with many novel searches.” Google was apparently able to determine patterns wherein its AI technology didn’t get things right by looking at examples of its responses over the past couple of weeks. It has since put protections in place based on its observations, starting by tweaking its AI to better detect humor and satire content. It has also updated its systems to limit the addition of user-generated replies in Overviews, such as social media and forum posts, which could give people misleading or even harmful advice. In addition, it has “added triggering restrictions for queries where AI Overviews weren’t proving to be as helpful” and has stopped showing AI-generated replies for certain health topics.